Lucy Strauss

about

Lucy Strauss is a musician and researcher drawing together viola performance, DIY machine learning using audio and bioelectric signals, and interactive performance system design. Through these practices, she seeks to deepen understanding of music-making with new and old technologies.

Lucy has performed in improvised and experimental music projects at Pony Books (Gothenburg), the TD Vancouver International Jazz Festival, Mixtophonics Festival, 8EAST Cultural Center & NOW Society (Vancouver), De Tanker (Amsterdam), Hundred Years Gallery (London), and Theatre Arts (Cape Town). She also enjoys contributing to interdisciplinary collaborations with fellow artists. She was the interaction designer for Denise Onen’s sounding the body as a sight at The Oscillations Exhibition 2024 (Akademie der Künste, Berlin), and she has coded, composed and played for installations by artist Mia Thom at Everard Read Gallery, Act of Brutal Curation Gallery, and Eclectica Contemporary (Cape Town), as well as at the 2022 Biennale de l'Art Africain Contemporain (Dakar). Lucy has presented workshops on composition (University of British Columbia), improvisation (Canadian Viola Society) and interactive music technology (Bowed Electrons Festival & Symposium).

Recent highlights include a student artist residency at Tate in London and a studio recording residency at the Studio for Electroacoustic Music, Akademie der Künste, in Berlin.

Lucy learnt to love improvising at the MusicDance021 artist residency (ZA) and further developed her improvisation practice at 8EAST & NOW Society (CA). She learnt composition at the University of Cape Town (BMus) and viola performance at the University of British Columbia (MMus). For two years, Lucy was an Artist in Residence at the University of Johannesburg (ZA). She is currently a CHASE-funded PhD researcher in Arts & Computational Technology at Goldsmiths, University of London (UK).

Lucy is based in Gothenburg (SE) and London (UK).

PROJECTS

Gained In Translation

words prompt sounds

sounds prompt words

photos by Robin Leverton

Gained In Translation is a music & text performance piece that I made in May 2025 for the Tech, Tea & Exchange residency at Tate.* The piece comprises different Machine Learning (ML) models strung together to form a recursive loop. The loop begins with human input: a short musical phrase that I play on viola in response to a text score.
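
The recursive loop can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the code in the project repository: the three stage functions (`classify_audio`, `write_text`, `generate_audio`) are hypothetical placeholders standing in for YAMNet, Claude and Stable Audio, and the order of stages (sound → labels → words → sound) is inferred from the description of the piece rather than taken from its source.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Sequence

Audio = List[float]  # simplified stand-in for an audio buffer


@dataclass
class LoopStep:
    """One pass through the loop: the audio heard, the labels predicted, the text written."""
    audio: Audio
    labels: List[str]
    text: str


@dataclass
class TranslationLoop:
    # Hypothetical stages; in the piece these roles are played by ML models.
    classify_audio: Callable[[Audio], List[str]]   # stands in for YAMNet
    write_text: Callable[[Sequence[str]], str]     # stands in for Claude
    generate_audio: Callable[[str, Audio], Audio]  # stands in for Stable Audio (text + audio -> audio)
    history: List[LoopStep] = field(default_factory=list)

    def run(self, human_phrase: Audio, iterations: int) -> List[LoopStep]:
        """Start from the live viola phrase and feed each stage's output into the next."""
        audio = human_phrase
        for _ in range(iterations):
            labels = self.classify_audio(audio)       # sounds prompt words...
            text = self.write_text(labels)
            self.history.append(LoopStep(audio, labels, text))
            audio = self.generate_audio(text, audio)  # ...words prompt sounds
        return self.history


# Toy stand-ins (made up for illustration) so the loop can run without any models.
loop = TranslationLoop(
    classify_audio=lambda a: ["Viola"] if len(a) < 4 else ["Music"],
    write_text=lambda labels: " and ".join(labels).lower(),
    generate_audio=lambda text, a: a + [0.0] * len(text.split()),
)
steps = loop.run([0.1, -0.2], iterations=3)
for step in steps:
    print(step.labels, step.text)
```

Keeping each pass in `history` makes the drift visible: each generated sound is reclassified, so small biases in one model's output can be carried into, and amplified by, the next pass.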

text as score

failure to copy

cross-modal translation as meta-composition

what is gained in translation?

*The Tech, Tea & Exchange residency was supported by Anthropic and Gucci.

I provide a GitHub repository for this project.

examples

The piece is different each time it is performed, even if the initial audio is identical.

In the example videos, the generated text features references to battle and to music practices that I have no connection to. These references emerged from the class labels of the pre-trained YAMNet model, and were likely further amplified by the recursive loop between the (also pre-trained) Stable Audio and Claude AI models featured in this piece. I would never have purposefully guided the piece towards violent themes and cultural appropriation. However, I have intentionally left these emergent themes in the piece to reveal the nature of the models and their training datasets.

models & datasets

YAMNet - a sound classification model from TensorFlow

The model is trained to predict the most probable sounds (from 521 AudioSet classes) in an audio waveform. We can predict sounds from a pre-recorded sound file (as I do for the tech demo version of Gained in Translation) or from an incoming stream of audio (as I do in the Gained in Translation performance).
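
As a rough illustration of the two modes above: YAMNet, loaded from TensorFlow Hub, takes a mono float32 waveform at 16 kHz and returns per-frame scores over its 521 classes. The NumPy-only sketch below assumes those scores have already been computed. `top_labels` averages them over frames and picks the most probable classes, and `RollingBuffer` is a hypothetical helper that keeps the latest second of a live input so it can be classified repeatedly. The buffer length, helper names and the example scores are illustrative assumptions, not the settings used in the piece.

```python
import numpy as np

SAMPLE_RATE = 16_000  # YAMNet expects mono float32 audio at 16 kHz, in [-1.0, 1.0]


def top_labels(scores: np.ndarray, class_names: list, k: int = 3) -> list:
    """Average per-frame scores (frames x classes) and return the k most probable (label, score) pairs."""
    mean_scores = scores.mean(axis=0)
    best = np.argsort(mean_scores)[::-1][:k]
    return [(class_names[i], float(mean_scores[i])) for i in best]


class RollingBuffer:
    """Hypothetical helper: holds the most recent `seconds` of a live input stream."""

    def __init__(self, seconds: float = 1.0, sample_rate: int = SAMPLE_RATE):
        self.size = int(seconds * sample_rate)
        self.data = np.zeros(self.size, dtype=np.float32)

    def push(self, chunk) -> None:
        """Append a block of incoming samples, dropping the oldest ones."""
        chunk = np.asarray(chunk, dtype=np.float32)[-self.size:]
        self.data = np.concatenate([self.data[len(chunk):], chunk])

    def waveform(self) -> np.ndarray:
        """Return a copy of the current window, ready to pass to the classifier."""
        return self.data.copy()


# With the real model (not run here), the TensorFlow Hub call looks like:
#   model = hub.load("https://tfhub.dev/google/yamnet/1")
#   scores, embeddings, log_mel_spectrogram = model(buffer.waveform())
# Below, made-up scores for three classes stand in for the model output.
fake_scores = np.array([[0.1, 0.7, 0.2], [0.3, 0.5, 0.0]])
print(top_labels(fake_scores, ["Speech", "Violin, fiddle", "Silence"], k=2))
```

For a pre-recorded file, the whole waveform can be passed to the model once. For a live stream, the buffer is pushed with each incoming block and classified at whatever rate the performance needs.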

dataset - "AudioSet consists of an expanding ontology of 632 audio event classes and a collection of 2,084,320 human-labeled 10-second sound clips drawn from YouTube videos" https://research.google.com/audioset/

Claude 3.7 Sonnet - a large language model created by Anthropic

dataset - "Training data includes public internet information, non-public data from third-parties, contractor-generated data, and internally created data. When Anthropic's general purpose crawler obtains data by crawling public web pages, we follow industry practices with respect to robots.txt instructions that website operators use to indicate whether they permit crawling of the content on their sites. We did not train this model on any user prompt or output data submitted to us by users or customers." - https://www.anthropic.com/transparency

Stable Audio 2.0 (text-to-audio & audio-to-audio) - an audio generation model created by Stability AI

dataset - "AudioSparx is an industry-leading music library and stock audio web site that brings together a world of music and sound effects from thousands of independent music artists, producers, bands and publishers in a hot online marketplace." https://www.audiosparx.com/

REALMS

Realm of Tensors and Spruce is a new music project created and performed by Lucy Strauss. The project explores and extends the sonic possibilities of the viola with live acoustic playing and electroacoustics. With this palette of practices, she builds and transforms soundworlds entirely from viola audio. The resulting performance melds improvised and composed structures.

Realms: an allusion to Lucy's compositional approach of building soundworlds.

@ Theatre Arts, Cape Town:

15 December 2024

For this show, I presented the work first as a performance, then as an interactive sound installation in which audience members could use a game controller to explore and influence a soundworld generated in real time.
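
The installation's actual controller mapping isn't documented here. As a hypothetical sketch of one common approach, a joystick axis (typically reported in the range -1 to 1 by game-controller libraries) can be scaled onto a sound parameter, with one-pole smoothing so the soundworld shifts gradually instead of jumping. The parameter (a filter cutoff), its range and the smoothing coefficient are illustrative assumptions.

```python
from dataclasses import dataclass


def axis_to_range(axis: float, low: float, high: float, exponential: bool = False) -> float:
    """Map a controller axis value in [-1, 1] onto [low, high]."""
    t = (max(-1.0, min(1.0, axis)) + 1.0) / 2.0  # clamp, then rescale to [0, 1]
    if exponential:
        # Equal stick movements give equal ratios (suits frequencies); requires low > 0.
        return low * (high / low) ** t
    return low + t * (high - low)


@dataclass
class SmoothedParam:
    """One-pole low-pass smoothing so parameter changes don't produce audible jumps."""
    value: float
    coeff: float = 0.9  # closer to 1.0 = slower, smoother response

    def update(self, target: float) -> float:
        self.value = self.coeff * self.value + (1.0 - self.coeff) * target
        return self.value


# Hypothetical usage: one stick axis controls a filter cutoff between 100 Hz and 10 kHz.
cutoff = SmoothedParam(value=1000.0)
for axis_x in [0.0, 0.5, 1.0]:  # made-up controller readings
    target = axis_to_range(axis_x, 100.0, 10_000.0, exponential=True)
    print(round(cutoff.update(target), 1))
```

An exponential mapping suits pitch- or frequency-like parameters because the ear hears ratios rather than differences; linear mapping is simpler for parameters such as mix levels.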

@ Pony Books, Gothenburg:

11 October 2024

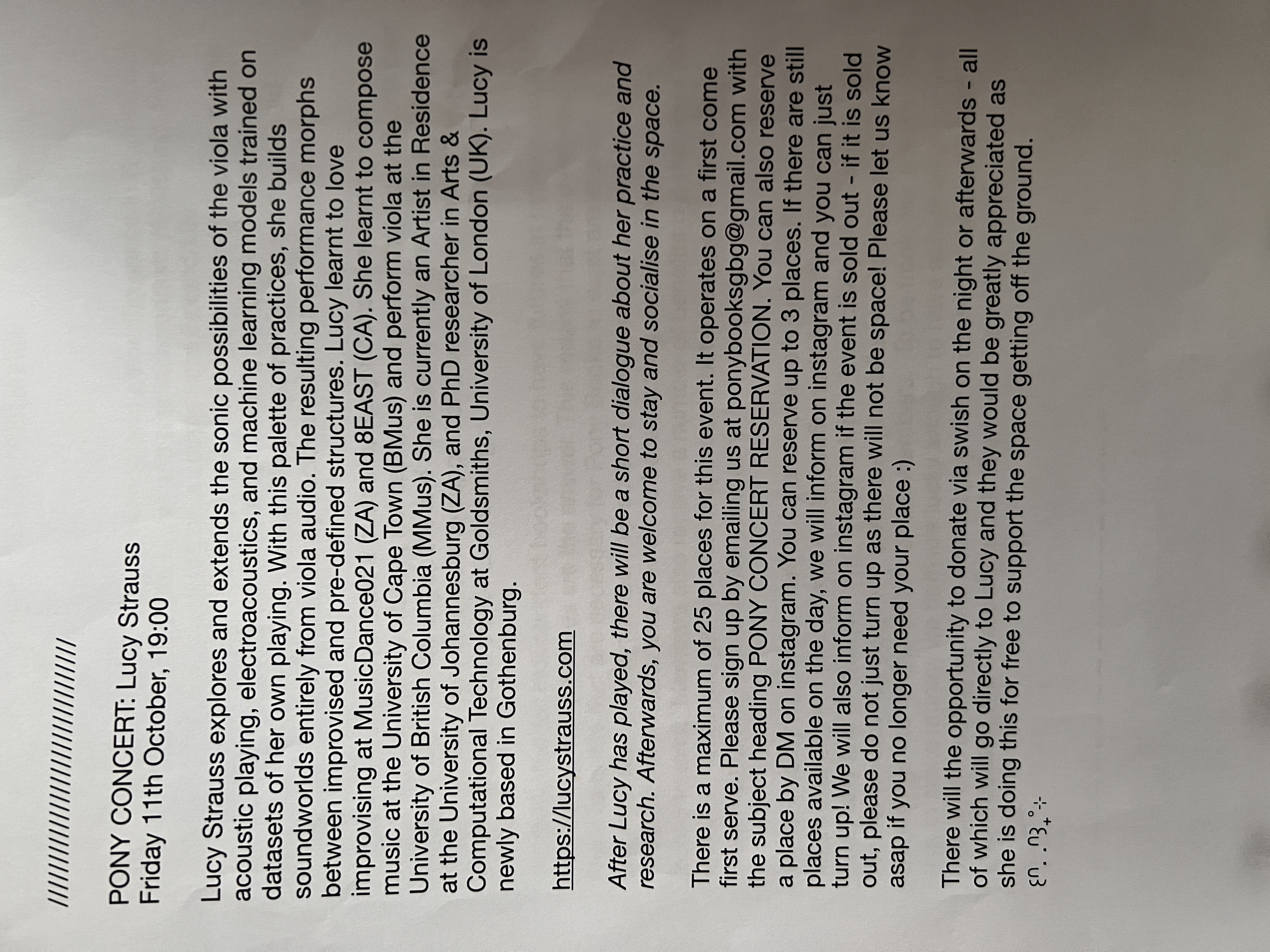

I performed an early version of Realms at Pony Books, a multilingual queer & arts bookshop and member space in Gothenburg, Sweden. The concert began at dusk on an autumn Friday evening. I played the set, then we held a Q&A session.

The elevator pitch for this project is that every single sound comes from the live viola, from the acoustic sounds in the concert space to the electroacoustic processing.

Promotional material for the Pony Books concert: aside from the usual digital channels, the concert information was shared on an actual piece of paper for people to find in the bookshop leading up to the event.