Archival Project Blog

Welcome to my ARCHIVAL PROJECT BLOG! This is where I share the behind-the-scenes process for some of the projects I work on.

I’m sharing all this with you so that when you experience the final artworks, you can tell everyone around you all about the inside information you mysteriously know. This will definitely lead to an increase in overall coolness and score you trivia points.

You’re welcome!

9 December 2021

In this post, I’m going to share a few more details on the gesture tracking system for Amass. I’ve mentioned in a few places already that the system uses the Python API for the Wave ring by Genki Instruments, but I haven’t yet explained what I did to get the features out of Python and into Max in a usable format.

One of the reasons that I’m so excited about the Wave ring is that Genki Instruments released a Python API this summer. HERE is the link to the GitHub repo. In the repo, you can find some examples that print a dictionary containing the current accelerometer and gyroscope states at regular intervals. The dictionary holds a lot of features, including raw gyroscope data as well as the Euler angles, which measure orientation relative to a starting point.

For my purposes with Amass, I needed to be able to select which features I wanted to work with, and receive a list of those features at a steady rate. Since I know Max better than Python, I decided to do most of the smoothing and scaling in Max. These aims led to two main objectives:

1. Extracting specific features from the dictionary and structuring them as a clean list.

2. Getting that list out of Python and into Max and Wekinator.

Initially, I just sent the entire dictionary from Python to Max via OSC, then unpacked the keys and values and selected the features I actually wanted to use in Max. This approach worked, but it proved to be cumbersome. Although I know Max better than Python, I had a hunch that with a little bit of love and attention, I could concoct an elegant solution with the feature selection constrained to Python.
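A minimal sketch of doing the feature selection on the Python side, using only the standard library. The dictionary keys, the `/wave` address and the helper names are all hypothetical (the real Wave API dictionary has its own layout), and this is not the code in osc_genki.py. It flattens the chosen entries into a list and packs that list as one OSC message of 32-bit floats.

```python
import struct

# Hypothetical sensor state; the real Wave API dictionary uses its own keys.
state = {
    "acc": {"x": 0.1, "y": -0.2, "z": 0.98},
    "gyro": {"x": 1.5, "y": 0.0, "z": -3.0},
    "euler": {"roll": 0.3, "pitch": 0.1, "yaw": 1.2},
}

# (group, axis) pairs to keep, in the order the receiver should get them.
SELECTED = [
    ("acc", "x"), ("acc", "y"), ("acc", "z"),
    ("gyro", "x"), ("gyro", "y"), ("gyro", "z"),
]


def select_features(state, selected=SELECTED):
    """Flatten the chosen entries of a nested dict into a list of floats."""
    return [float(state[group][axis]) for group, axis in selected]


def _pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def encode_osc(address: str, values) -> bytes:
    """Encode one OSC message whose arguments are all big-endian float32."""
    type_tags = "," + "f" * len(values)
    return (
        _pad(address.encode("ascii"))
        + _pad(type_tags.encode("ascii"))
        + struct.pack(f">{len(values)}f", *values)
    )


features = select_features(state)
packet = encode_osc("/wave", features)  # "/wave" is a made-up address
```

The resulting bytes could then be sent over UDP with `socket.sendto` to whichever port the receiving patch listens on. An OSC library would do the encoding for you; it is written out by hand here only to keep the sketch dependency-free.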

The examples in the repo by Genki Instruments also use asyncio, which facilitates concurrent sending of sensor states from the Wave ring. In other words, the program can do more than one thing at the same time. That’s good. Although concurrency is a very important concept for working with live sensor data, in the examples it results in the data package printing three times in quick succession, rather than once at every specified interval. As you might imagine, this was the opposite of useful for building my own features in Max MSP. For example, none of the averaging calculations were accurate.
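One generic way to turn bursts of readings into a steady stream is a small gate that lets through at most one reading per interval. The sketch below is an illustration of that idea, not necessarily how osc_genki.py handles it; the class name and the simulated arrival times are made up.

```python
class IntervalGate:
    """Let at most one reading through per `interval` seconds."""

    def __init__(self, interval: float):
        self.interval = interval
        self._next_due = None  # earliest time at which the next reading may pass

    def accept(self, now: float) -> bool:
        """Return True if a reading arriving at time `now` should be sent on."""
        if self._next_due is None or now >= self._next_due:
            self._next_due = now + self.interval
            return True
        return False


# Simulated arrival times in seconds: bursts of three readings close together.
arrivals = [0.000, 0.001, 0.002, 0.100, 0.101, 0.102, 0.200, 0.201, 0.202]
gate = IntervalGate(interval=0.05)
sent = [t for t in arrivals if gate.accept(t)]  # one reading per burst survives
```

In a live program, `now` would come from `time.monotonic()` inside the callback that receives each sensor state.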

For the (hopefully) elegant and useful program that I made based on the original examples in the API by Genki Instruments:

Find osc_genki.py in the examples folder of THIS GitHub repo.

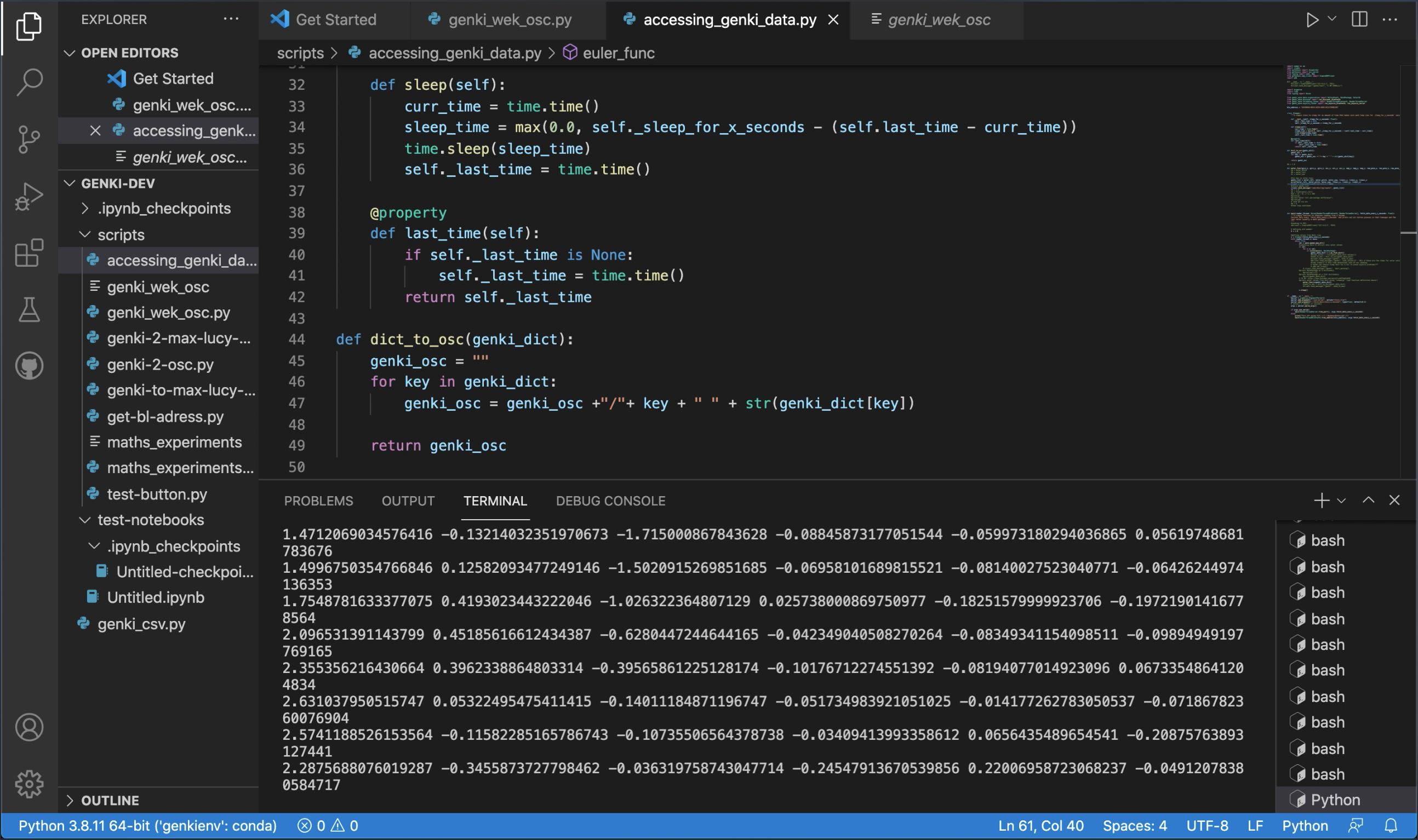

Here is a screenshot of the program that I eventually renamed as osc_genki.py. You can see that six features are printed to the terminal. In Amass, I used three acceleration features and three gyroscope features. From the acceleration features, I also built a total acceleration feature in Max MSP. You are welcome to use osc_genki.py for your own projects with Wave.
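The total acceleration feature lives in Max MSP, but the arithmetic is easy to show in Python. This is a minimal sketch that assumes "total acceleration" means the Euclidean magnitude of the three axes; the post does not spell out the formula, and the function name is hypothetical.

```python
import math


def total_acceleration(ax: float, ay: float, az: float) -> float:
    """Magnitude of the acceleration vector (assumed definition)."""
    return math.sqrt(ax * ax + ay * ay + az * az)


# A 3-4-0 vector has magnitude 5.
magnitude = total_acceleration(3.0, 4.0, 0.0)
```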


8 December 2021

I spent most of November buried in preparations for the Bowed Electrons Festival & Symposium, where I premiered a new composition, Amass. I also shared the behind-the-scenes process in my presentation: Research Through Design for Interactive Music Systems. The purpose of the presentation is to inspire my Music Technology peers to build interactivity into their own work; provide insights that are useful to fellow interactive music system designers; and increase understanding of interactivity in Music Technology, towards an enriched audience experience.

Bowed Electrons is hosted by the Music Technology department of the South African College of Music, University of Cape Town. I graduated from SACM with my BMus in Composition in 2018. I’ve kept up to date with Bowed Electrons since then and it was so lovely to be invited to contribute this year!

Click HERE to watch the presentation.

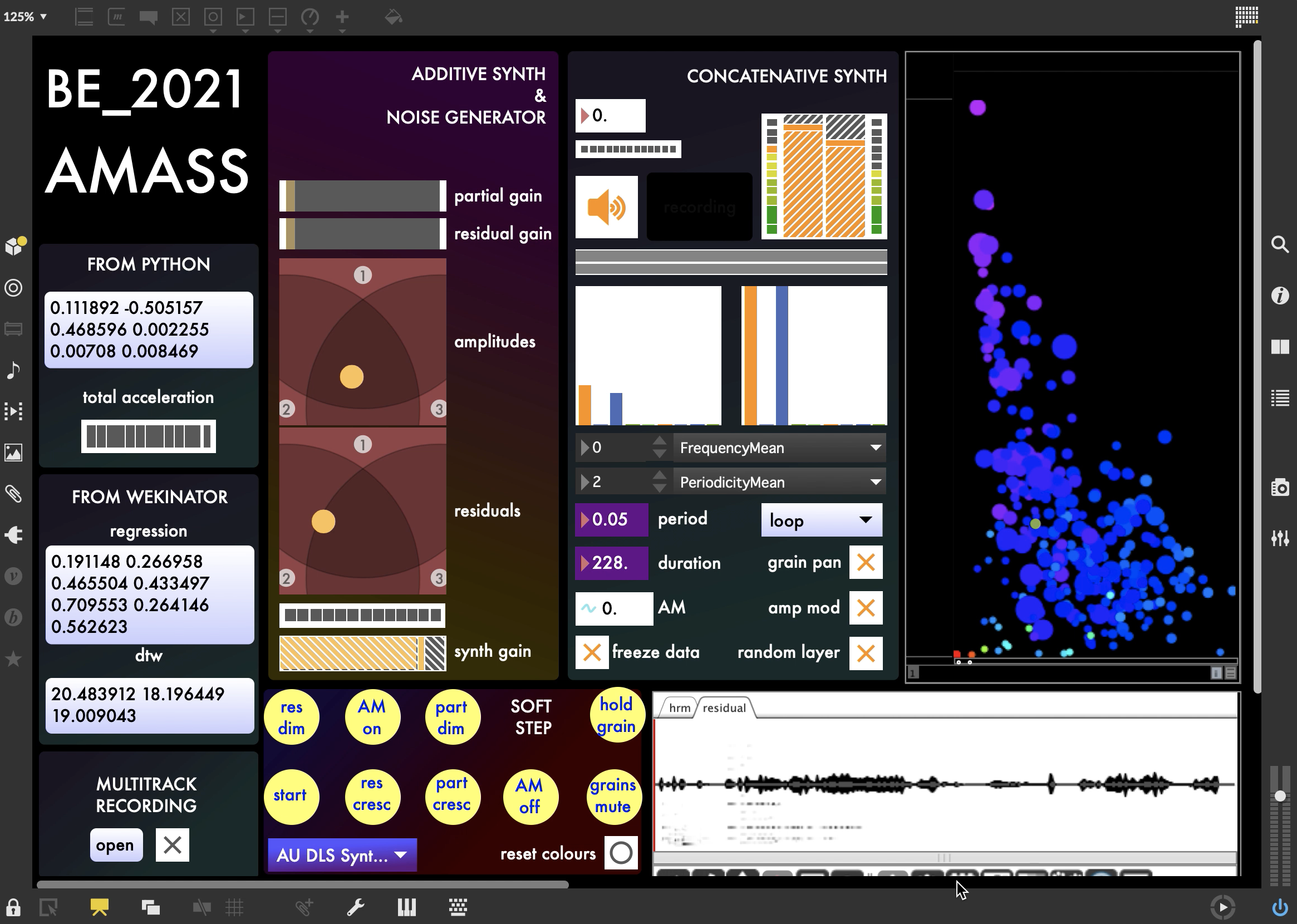

The #aesthetic Max MSP patch that I made for the performance:

I wanted to be able to perform without having to touch the computer, so it was really important to have only the essentials in presentation mode. My laptop is a bit old and doesn’t have the CPU to handle a lot of live objects that constantly update. I remembered the headache it caused when I was making instance in 2020, so I kept everything minimal and only included a few objects for visual feedback: just 5 meter~ objects.

In a nutshell, this is what happens in Amass:

Amass begins with an acoustic improvisation, while the incoming audio is captured and organized into a concatenative synthesizer according to sound features. Specific grains are then triggered and manipulated according to the orientation of the violist's bow hand. The interaction between gesture and sound builds until the violist triggers an additive synthesizer and noise generator that can move seamlessly between the partials and residuals of three different improvisations on viola. These improvisations were collected during the composition process. The system recognizes specific gestures and scrubs through specific sections of the three captured improvisations depending on which gesture is detected, scrubbing at the same speed that the gesture is performed. At the same time, the violist blends between different timbral combinations of the synthesized partials and residuals from each improvisation, in a dynamic interaction between action and sound.

Here is the performance:


3 September 2021

It has been a while since I launched this short blog with a single, perfect post. To my millions of devoted followers and fans that I definitely have, fear not! The reason for this prolonged and lonesome silence is not that I have nothing to share, but that I have been too busy creating wonderful things.

With this post, I present the making of Night Swim, a site-specific installation by Mia Thom in collaboration with Claire Patrick and Lucy Strauss... that’s me! The installation comprises three large sculptures for people to lie on or interact with as they please. Each sculpture contains a speakerboard, playing a generative soundscape that I composed and developed in Max MSP.

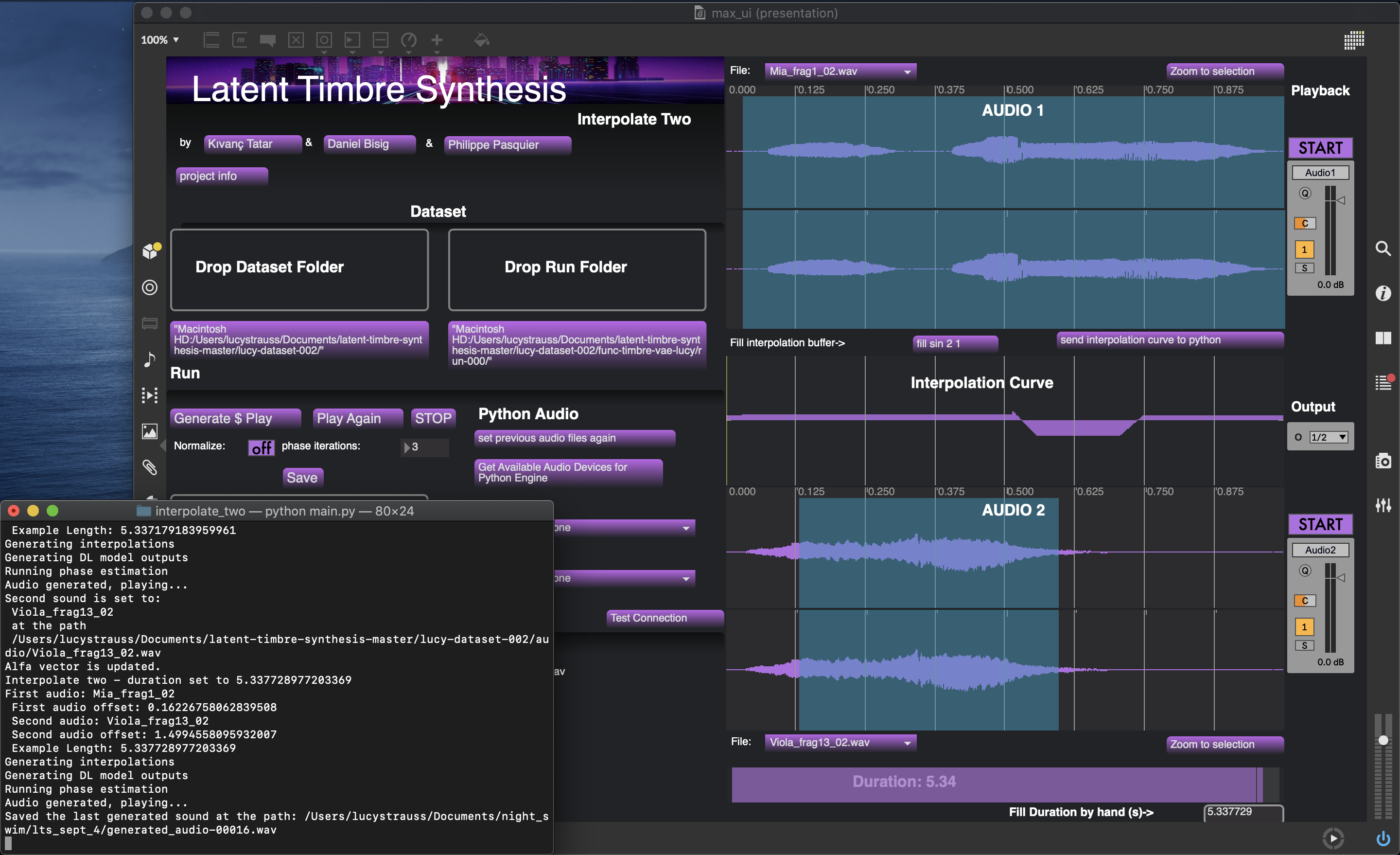

You can read more about the raw sounds that Mia and I created on the Night Swim project page of this website, but today I want to tell you about Latent Timbre Synthesis (LTS). LTS is a Deep Learning tool that I used to synthesize new sounds by interpolating between two selected audio samples. Back in 2020, I took part in the qualitative study for LTS as a composer, to investigate how I could use LTS in my artistic practice. I’m so happy that I could use it for Night Swim! LTS is open source and available for anyone to use.

You might be thinking something like: “WHY did you use Deep Learning in this particular artwork, Lucy? Just to be extra?”

My answer is: “Well no! Although I do like to be extra on occasion, the use of LTS in Night Swim expresses themes of ambiguity and organic fluctuation. Sounds obscured and amalgamated into one by the forces of the ocean tides...”

The Latent Timbre Synthesis GUI:

The interpolation curve that you see in the image above was generated by fluctuations in geophysical ocean data. The synthesized sound resulting from this generation is made up of Mia’s voice singing a phrase that I composed, and me playing a different phrase that I composed on viola. According to the interpolation curve, the synthesized audio is pretty balanced throughout the whole generation, though there are some subtle fluctuations. The first half of the generated audio is closer to the timbre of Mia’s voice, but then there is a dip and the viola timbre takes over. At about two thirds of the way into the generation, the timbre pulls back towards Mia’s voice.

Now that I’ve told you all about how I used Mia’s voice to make new sounds, let me tell you about how this collaboration came to be:

My artistic relationship with Mia goes way back to April 2017, when we were both in the final year of our undergraduate degrees. Mia posted an open call for a composer for her final BFA exhibition, I answered the call, and we made the first ever Darkroom Performance. Over the next four years, I worked with Mia on many different projects that were exhibited at galleries all around Cape Town. It has been so incredibly wonderful to be able to make a project together again for Night Swim, even though I’ve spent the last two years on literally the other side of the world!

Here Mia and I are lying on a sculpture together to test if it holds... a lot of kilograms (it does!) and here is some evidence that we did in fact get some work done that day:

Here is a picture of my DIY workstation in the Frankfurt airport, during a 12-hour layover. Unfortunately, this session was short-lived because there were hardly any working plug points.


11 June 2021

Welcome to my telematic music-dance project with Makhanda-based dance artist, Julia de Rosenworth. Our project doesn’t have a title just yet, but you can watch our creative, soma-design process unfold here. Then when you watch our first performance in a few months, you’ll feel so fancy because you will know what’s going on! Look at you, knowing all the inside jokes...

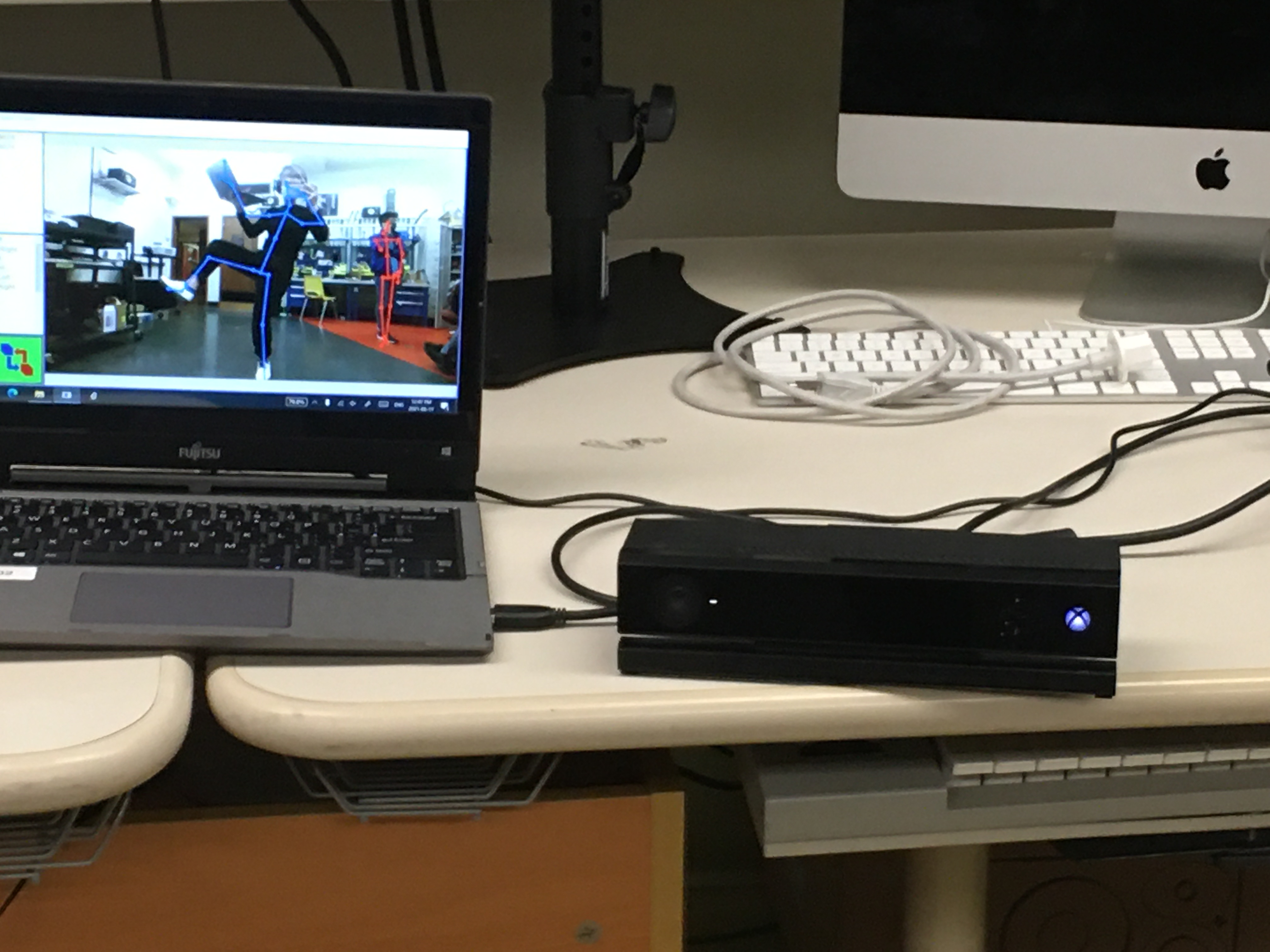

This morning, I got into the studio to do some work with the Kinect. I used my own body to refine the scaling of the gesture data, in preparation for the next tech rehearsal with Julia.

The defining features of today’s work were the balancing acts... an embodied interaction between human, laptop hardware and laptop software, if you will.

Once the data was workable, I made some good progress with the simple Machine Learning model we are using for this project in Max MSP. Here is a little tech rant. Skip over it for more fun jokes if it just sounds like “blah blah blah...”

First, I input gesture data into Max MSP, using the KiCASS system for gesture tracking. Then, the data is filtered using the [pipo mavrg] external.
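For anyone curious what scaling and moving-average filtering do to a stream of numbers, here is a small Python sketch of both steps. It shows the general technique only: the real work happens inside Max with [pipo mavrg], and the function and class names, window size and sample values below are made up for illustration.

```python
from collections import deque


def scale(value, in_lo, in_hi, out_lo, out_hi):
    """Map value linearly from [in_lo, in_hi] to [out_lo, out_hi] (no clamping)."""
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


class MovingAverage:
    """Average of the most recent `size` values."""

    def __init__(self, size: int):
        if size < 1:
            raise ValueError("size must be at least 1")
        self._window = deque(maxlen=size)  # old values drop off automatically

    def update(self, value: float) -> float:
        """Add one value and return the mean of the current window."""
        self._window.append(float(value))
        return sum(self._window) / len(self._window)


# Smooth a short, made-up stream of readings with a window of three.
smoother = MovingAverage(size=3)
smoothed = [smoother.update(v) for v in [3, 6, 9, 12]]
```

A larger window gives a smoother but slower-reacting signal, which is the usual trade-off when filtering gesture data.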

Once the numbers are scaled and filtered, I record gesture data from a few specific poses into a MuBu track. At the same time, I record the corresponding parameter settings of a granular viola synth that I made. I train the [mubu.xmm] object on the data, so that it sets the synth parameters to specific settings, depending on the position of my body. Since [mubu.xmm] uses a regression Machine Learning model, it interpolates between the different poses, like it’s filling in the gaps. That way, it (hopefully!) always sounds like something!
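To get a feel for "filling in the gaps" between recorded poses, here is a toy Python sketch. It swaps in inverse-distance weighting, a much simpler technique than the model that [mubu.xmm] trains, so treat it as an illustration of interpolating between examples and not as a description of XMM. The function name, poses and parameter values are all hypothetical.

```python
import math


def interpolate_params(pose, examples, power=2.0):
    """Blend the parameter vectors of recorded examples by closeness to `pose`.

    `examples` is a list of (pose_vector, param_vector) pairs.
    """
    weights = []
    for example_pose, params in examples:
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(pose, example_pose)))
        if dist == 0.0:
            return list(params)  # exactly on a recorded pose: use its settings
        weights.append(1.0 / dist ** power)
    total = sum(weights)
    n_params = len(examples[0][1])
    return [
        sum(w * params[i] for w, (_, params) in zip(weights, examples)) / total
        for i in range(n_params)
    ]


# Two made-up poses, each paired with two made-up synth parameter values.
examples = [
    ([0.0, 0.0], [0.0, 100.0]),
    ([1.0, 0.0], [1.0, 200.0]),
]
halfway = interpolate_params([0.5, 0.0], examples)
```

A pose halfway between the two recorded poses gets parameter values halfway between their settings, and every pose in between produces some blend, so the synth always receives a usable value.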

Here are my makeshift moves, in preparation for Julia’s real moves.
