PROJECTS

︎︎︎

PROJECTS

︎︎︎

Gained In Translation

words prompt sounds

sounds prompt words

photos by Robin Leverton

Gained In Translation is a music & text performance piece that I made in May 2025 for the Tech, Tea & Exchange residency at Tate.* The piece comprises different Machine Learning (ML) models strung together to form a recursive loop. The loop begins with human input: a short musical phrase that I play on viola in response to a text score.

text as score

failure to copy

cross-modal translation as meta-composition

what is gained in translation?

*The Tech, Tea & Exchange residency was supported by Anthropic and Gucci.

I provide a︎Github repository for this project.

examples

The piece is different each time it is performed, even if the initial audio is identical:

In the example videos, the generated text features references to battle and to music practices that I have no connection to. These references emerged as a result of the class labels of the pre-trained YAMNet model, and were likely further amplified by the recursive loop between the (also pre-trained) Stable Audio and Claude AI models featured in this piece. I would never have purposefully guided the piece towards violent themes and cultural appropriation. However, I have intentionally left these emergent themes in the piece to reveal nature of the models and their training datasets.

models & datasets

YAMNet - a sound classification model from TensorFlow

The model is trained to predict the most probable sound in an audio waveform. We can predict sounds from a pre-recorded sound file (as I do for the tech demo version of Gained in Translation) or from an incoming stream of audio (as I do in the Gained in Translaton performance).

dataset - "AudioSet consists of an expanding ontology of 632 audio event classes and a collection of 2,084,320 human-labeled 10-second sound clips drawn from YouTube videos" https://research.google.com/audioset/

The model is trained to predict the most probable sound in an audio waveform. We can predict sounds from a pre-recorded sound file (as I do for the tech demo version of Gained in Translation) or from an incoming stream of audio (as I do in the Gained in Translaton performance).

dataset - "AudioSet consists of an expanding ontology of 632 audio event classes and a collection of 2,084,320 human-labeled 10-second sound clips drawn from YouTube videos" https://research.google.com/audioset/

Claude 3.7 Sonnet - a large language model created by Anthropic

dataset - "Training data includes public internet information, non-public data from third-parties, contractor-generated data, and internally created data. When Anthropic's general purpose crawler obtains data by crawling public web pages, we follow industry practices with respect to robots.txt instructions that website operators use to indicate whether they permit crawling of the content on their sites. We did not train this model on any user prompt or output data submitted to us by users or customers." - https://www.anthropic.com/transparency

dataset - "Training data includes public internet information, non-public data from third-parties, contractor-generated data, and internally created data. When Anthropic's general purpose crawler obtains data by crawling public web pages, we follow industry practices with respect to robots.txt instructions that website operators use to indicate whether they permit crawling of the content on their sites. We did not train this model on any user prompt or output data submitted to us by users or customers." - https://www.anthropic.com/transparency

Stable Audio 2.0 (text & audio-to-audio)

dataset - "AudioSparx is an industry-leading music library and stock audio web site that brings together a world of music and sound effects from thousands of independent music artists, producers, bands and publishers in a hot online marketplace." https://www.audiosparx.com/

dataset - "AudioSparx is an industry-leading music library and stock audio web site that brings together a world of music and sound effects from thousands of independent music artists, producers, bands and publishers in a hot online marketplace." https://www.audiosparx.com/

REALMS

Realm of Tensors and Spruce is a new music project created and performed by Lucy Strauss. The project explores and extends the sonic possibilities of the viola with live acoustic playing and electroacoustics. With this palette of practices, she builds and transforms soundworlds entirely from viola audio. The resulting performance melds between improvised and composed structures.

Realms: an allusion to Lucy's compositional approach of building soundworlds.

Realms: an allusion to Lucy's compositional approach of building soundworlds.

@ Theatre Arts, Cape Town:

15 December 2024

For this show, I presented this work first in a performance setting, then as an interactive sound installation using a game controller to afford audience members the chance to explore and influence a soundworld generated in real-time.

@ Pony Books, Gothenburg:

11 October 2024

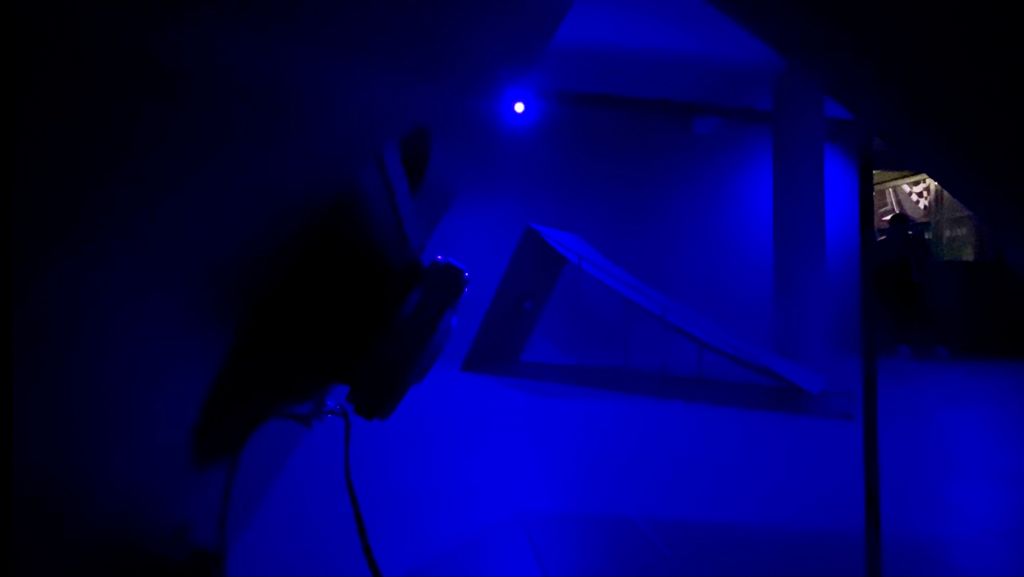

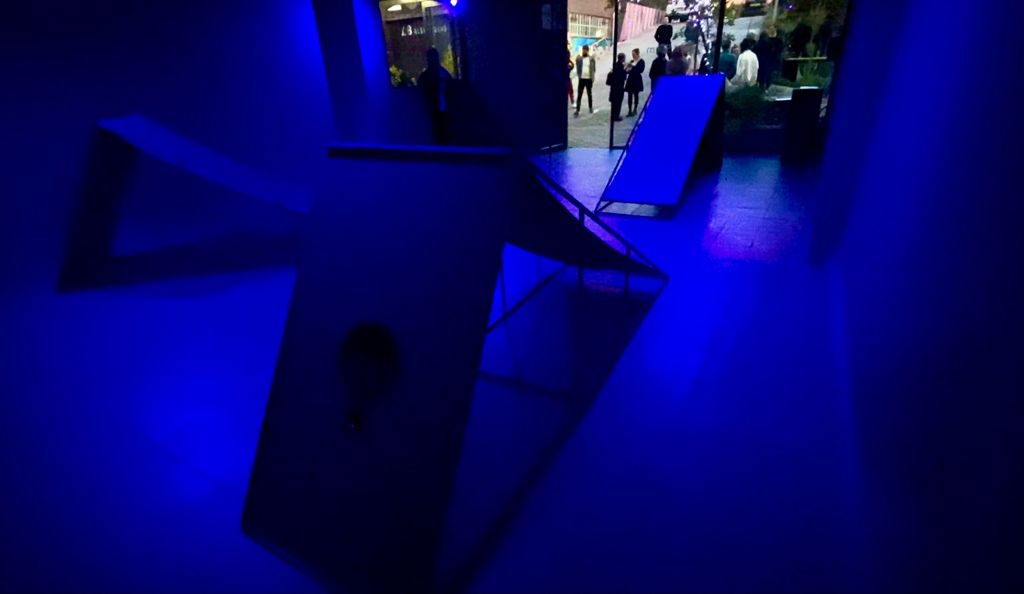

I performed an early version of Realms at Pony Books, a multi-lingual queer & arts bookshop and member space in Gothenburg, Sweden. The concert began at dusk on an Autumn Friday evening. I played the set, then we held a Q&A session.

The elevator pitch for this project is that every single sound comes from the live viola, from the acoustic sounds in the concert space, to electroacoustic process.

The promotional material for the Pony Books concert. Aside from the usual digital places, the concert information was shared on an actual piece of paper for people to find in the bookshop leading up to the event.![]()

︎︎︎

Night Swim

Check out my ︎Project Blog page on this website for some behind the scenes content on the making of Night Swim.

Night Swim is a sight-specific installation work by Mia Thom in collaboration with Lucy Strauss and Clare Patrick. The installation was premiered at the Art of Brutal Curation gallery (Cape Town, August - September 2021) and was selected for installation at the Everard Read gallery group show (Cape Town, December 2021).

![]()

Plunging participants into a monochromatic, blue environment, this experimental installation comprises of three large scale sculptures which function as speakers to lie on. Composed and coded by Lucy Strauss, these forms emit a soundscape of strings, room tone and voice, transformed by geophyscial data from the South Atlantic ocean.

![]()

The sonic materials of the soundscape comprise audio captured from broken violin, viola, cello and contrabass strings; composed fragments for voice and viola based on the acoustic modes of the installation space; and viola improvisations. The collected acoustic materials are placed in an environment constructed from geophysical data from the South Atlantic ocean. Machine learning algorithms in Wekinator trigger the collected audio materials and perform transformations in the time and frequency domain, in accordance with ocean’s fluctuations and developments in time.

![]()

Night Swim also utilizes Latent Timbre Synthesis (LTS), a new audio synthesis method using Deep Learning. We used LTS to interpolate between the timbres of the raw sonic materials, and set the interpolation curve according to fluctuations in the ocean data. This curve determines how much each sound has an effect on the resulting synthesized sound. A person experiencing Night Swim will hear the raw sonic materials, as well as synthesized audio that lies somewhere between these materials. For example, one could hear a sound that exists somewhere between two different human voices; a human voice and a viola; or a viola and a broken bass string.

![]()

Night Swim

Check out my ︎Project Blog page on this website for some behind the scenes content on the making of Night Swim.Night Swim is a sight-specific installation work by Mia Thom in collaboration with Lucy Strauss and Clare Patrick. The installation was premiered at the Art of Brutal Curation gallery (Cape Town, August - September 2021) and was selected for installation at the Everard Read gallery group show (Cape Town, December 2021).

Plunging participants into a monochromatic, blue environment, this experimental installation comprises of three large scale sculptures which function as speakers to lie on. Composed and coded by Lucy Strauss, these forms emit a soundscape of strings, room tone and voice, transformed by geophyscial data from the South Atlantic ocean.

The sonic materials of the soundscape comprise audio captured from broken violin, viola, cello and contrabass strings; composed fragments for voice and viola based on the acoustic modes of the installation space; and viola improvisations. The collected acoustic materials are placed in an environment constructed from geophysical data from the South Atlantic ocean. Machine learning algorithms in Wekinator trigger the collected audio materials and perform transformations in the time and frequency domain, in accordance with ocean’s fluctuations and developments in time.

Night Swim also utilizes Latent Timbre Synthesis (LTS), a new audio synthesis method using Deep Learning. We used LTS to interpolate between the timbres of the raw sonic materials, and set the interpolation curve according to fluctuations in the ocean data. This curve determines how much each sound has an effect on the resulting synthesized sound. A person experiencing Night Swim will hear the raw sonic materials, as well as synthesized audio that lies somewhere between these materials. For example, one could hear a sound that exists somewhere between two different human voices; a human voice and a viola; or a viola and a broken bass string.

Signal Space

Signal Space is an electroacoustic piece that I composed for the 2023 Bowed Electrons Festival & Symposium. The piece functions as a composition study where I apply the concept of 'data as design material' in music composition. This concept comes from soma-design methodology, where the design process is guided by bodily practices and grounded in a designer's lived experience. In addition to being a complete work on its own, this piece informs the design process for an interactive music system that I am making. In this way, I use composition as a design method.

In this piece, I use data recordings of the electrical activity from of own muscle contractions (electromyographic) and heartbeat (electrocardiographic). The composition comprises two sections: in the first section, I use this bioelectric data as sound material. In the second, the data functions as control signals applied to pre-recorded samples of viola audio.

The piece begins with a raw sonified electromyographic signal. We hear the signal as it is with very little filtering. Gradually, a swishing sound reveals itself. This is the same raw signal, but it has been analysed and separated into different frequency bands. We can now understand the raw signal as a rich combination of many bioelectric impulses (known in neuroscience as action-potentials), shooting through multiple muscle fibers all at once. We then hear the most prominent frequencies within each ‘swish’ as a collection of clicks and pops.

These signals then introduce glitches in short loops of viola samples through continuous controll of the loop start and end points (in the audio sample tracks). These glitches occasionally create new synthesized pitches. Towards the end of the piece, my heartbeat grounds the music in contrast to the chaos of the glitching loops. Finally, only my heartbeat remains.

By delving into data as design material I hope to deepen understandings of the underlying bodily processes inside our bodies as living beings. By doing so through music, we can gain nuanced, tacit understandings that may difficult to articulate with language or quantitative methods.